Blog posts

Cockpit 0.81 Released

Cockpit releases every week. This week it was 0.81.

NTP servers

Cockpit now allows configuration of which NTP servers are used for time syncing. This configuration is possible when timesyncd is being used as the NTP service.

Network switch regression

There was a regression in Cockpit 0.78 where certain on/off switches in the networking configuration stopped working. This has now been fixed.

Delete Openshift routes and deployment configs

In the Kubernetes cluster dashboard, it’s now possible to delete Openshift style routes and deployment configs.

From the future

I’ve refactored the Cockpit Kubernetes container terminal widget for use by other projects. It’s been integrated into the Openshift Web Console for starters. This widget will be used by Cockpit soon.

Try it out

Cockpit 0.81 is available now:

Cockpit 0.80 Released

Cockpit releases every week. This week it was 0.80.

SSH private keys

You can now use Cockpit to load SSH private keys into the ssh-agent that’s running in the Cockpit login session. These keys are used to authenticate against other systems when they are added to the dashboard. Cockpit also supports inspecting and changing the passwords for SSH private keys.

Always start an SSH agent

Cockpit now always starts an SSH agent in the Cockpit login session. Previously this only happened if the correct PAM modules were loaded.

From the future

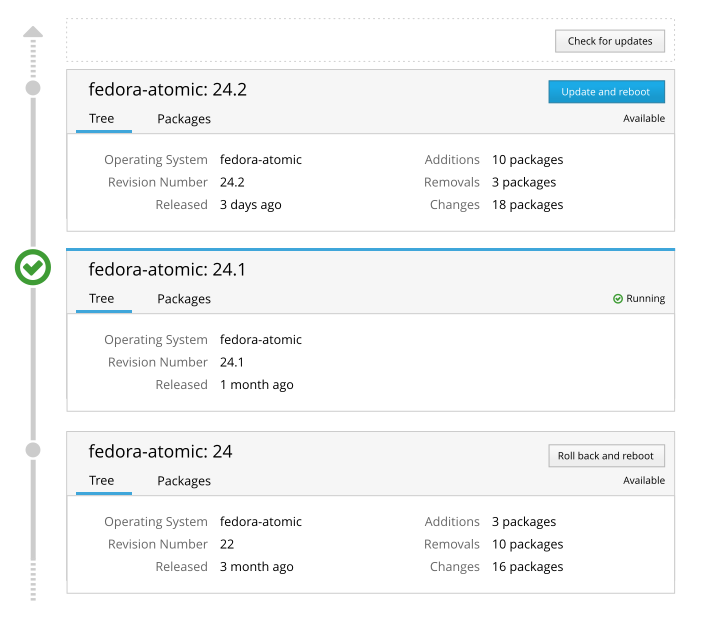

Peter has done work to build an OSTree UI, useful for upgrading and rolling back the operating system on Atomic Host:

Subin has done work to get Nulecule Kubernetes applications working with Atomic and larger Kubernetes clusters.

Try it out

Cockpit 0.80 is available now:

Using Vagrant to Develop Cockpit

Starting with Cockpit release 0.79 you can use Vagrant to bring up a VM in which you can test or develop Cockpit. The VM is isolated from your main system so any system configuration you change via Cockpit will only happen in the VM.

The Vagrant VM mounts the Cockpit package assets from your git repository checkout, so when you make changes on the host system, you can refresh the browser and immediately see the result. For changes to C code, the Cockpit binaries would have to be rebuilt and testing via Vagrant won’t work.

Getting Started

To start, you’ll need Vagrant. On Fedora I use vagrant-libvirt. Keep in mind that vagrant-libvirt requires root privileges, so you’ll need to run vagrant with sudo.

$ sudo yum install vagrant vagrant-libvirt

Next, in a copy of the Cockpit git repository, you run vagrant up:

$ git clone https://github.com/cockpit-project/cockpit

$ cd cockpit

$ sudo vagrant up

The first time this runs it’ll take a while, but eventually you’ll have a Vagrant VM running. When you do this step again, it should be pretty fast.

The VM will listen for connections on your local machine’s http://localhost:9090, but even though you’re connecting to localhost it’ll be Cockpit in the VM you’re talking to.

If you already have Cockpit running on your local machine, then this won’t work, and you’ll need to use the IP address of the VM instead of localhost. To find it:

$ sudo vagrant ssh-config

Two user accounts are created in the VM, and you can use either one to log into Cockpit:

- User: “admin” Password: “foobar”

- User: “root” Password: “foobar”

Testing a Pull Request

If there’s a Cockpit pull request that you’d like to test, you can now do that with the Vagrant VM. Replace the 0000 in the following command with the number of the pull request:

$ git fetch origin pull/0000/head

$ git checkout FETCH_HEAD

The pull request can only contain changes to Cockpit package assets. If it contains changes to the src/ directory, then the pull request involves rebuilding binaries, and testing it via Vagrant won’t work.

Now refresh your browser, or if necessary, login again. You should see the changes in the pull request reflected in Cockpit.

Making a change

You can make a change to Cockpit while testing that out in your Vagrant VM. The changes should be to Cockpit package assets. If you change something in the src/ directory, then binaries will have to be rebuilt, and testing it via Vagrant won’t work.

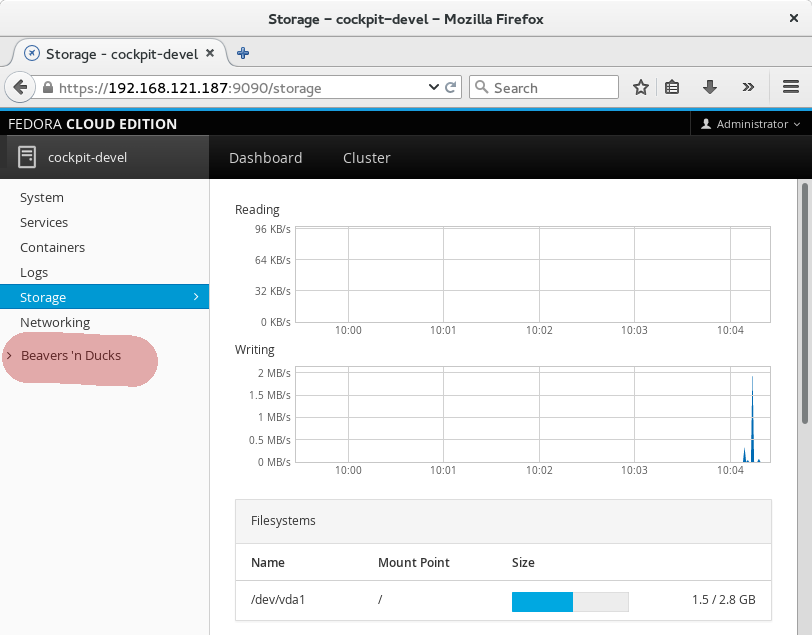

I chose to change some wording in the sidebar in pkg/shell/index.html:

<a data-toggle="collapse" data-target="#tools-panel" class="collapsed" translatable="yes">

- Tools

+ Beavers 'n Ducks

</a>

And after refreshing Cockpit, I can see that change:

The same applies to JavaScript or CSS changes as well. In order to actually contribute a change to Cockpit you’ll want to look at the information about Contributing, and if you need help understanding how to add a plugin package you can look at the Developer Guide.

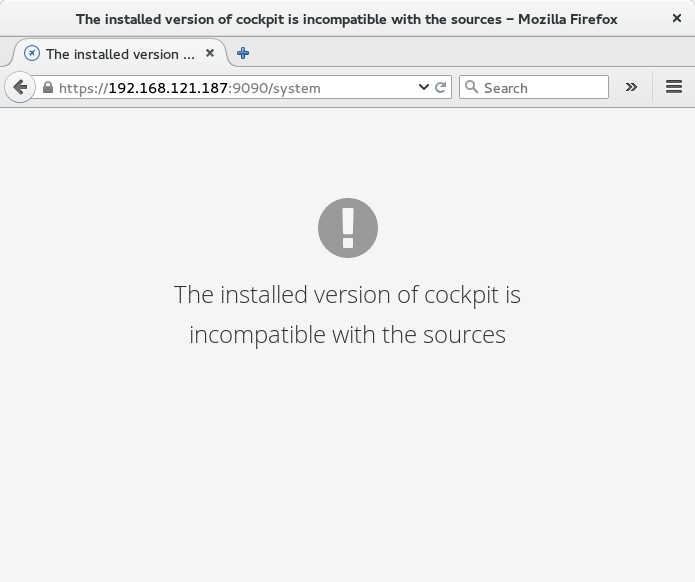

Bringing the Vagrant VM in sync

After each Cockpit release, there will be new binary parts to Cockpit. In order to continue to use the Vagrant VM, you’ll need to rebuild it. A message like this should appear when that’s necessary.

Rebuild the Vagrant VM like this:

$ sudo vagrant destroy

$ sudo vagrant up

Troubleshooting

On Fedora, FirewallD got in the way of Vagrant’s use of NFS. On my machine, I had to do this to get it to work:

$ sudo firewall-cmd --set-default-zone=trusted

Cockpit 0.79 Released

Cockpit releases every week. This week it was 0.79.

Vagrant File

Cockpit now has a Vagrantfile. If you want to check out the latest Cockpit, test pull requests, or hack on Cockpit, you can run:

$ sudo vagrant up

... in a Cockpit git repo. There's a [tutorial with more information](http://cockpit-project.org/blog/cockpit-vagrantfile.html).

Testing with libvirt

Dominik migrated the Cockpit integration tests to run on libvirt, rather than using Qemu directly. This makes the tests more portable, and is an important step towards running them distributed.

From the future

Marius has done some work on configuring NTP servers. Hopefully this will be in a release soon:

Try it out

Cockpit 0.79 is available now:

Cockpit 0.78 Released

Cockpit releases every week. This week it was 0.78.

Cockpit 0.77 Released

Cockpit releases every week. This week it was 0.77.

Componentizing Cockpit

Marius and Stef completed a long-running refactoring task of splitting Cockpit into components.

In an age long gone Cockpit used to be one monolithic piece of HTML and JavaScript. Over the last year we’ve steadily refactored to split this out so various components can be loaded optionally and/or from different servers depending on capabilities and operating system versions.

Marius also removed the cockpitd DBus service, which we’ve been moving away from. Cockpit wants to talk to system APIs and not install its own API wrappers like cockpitd.

The URLs changed

Because of the above, we unfortunately had to change the URLs. But we’ve taken the opportunity to make them a lot simpler and cleaner.

Authentication when Embedding Cockpit

Stef worked on partitioning the cockpit authentication so that embedders of Cockpit components don’t need to share authentication state with a normal instance of Cockpit loaded in a browser.

Deleting and Adjusting Kubernetes Objects

Subin implemented deletion of Kubernetes objects, and adjusting things like the number of replicas in Replication Controllers.

Warning when too many machines

Cockpit now gives a warning when adding “too many” machines to the dashboard. We’ve set the warning to 20 machines, but various operating systems can set this warning to be lower.

From the Future

Andreas did designs for managing the SSH keys loaded for use when connecting to machines:

Try it out

Cockpit 0.77 is available now here:

Making REST calls from Javascript in Cockpit

Note: This post has been updated for changes in Cockpit 0.90 and later.

Cockpit is a user interface for servers. In earlier tutorials there’s a guide on how to add components to Cockpit.

Not all of the system APIs use DBus. So sometimes we find ourselves in a situation where we have to use REST (which is often just treated as another word for HTTP) to talk to certain parts of the system. For example Docker has a REST API.

For this tutorial you’ll need at least Cockpit 0.58. There was one last tweak that helped with the superuser option you see below. You can install it in Fedora 22 or build it from git.

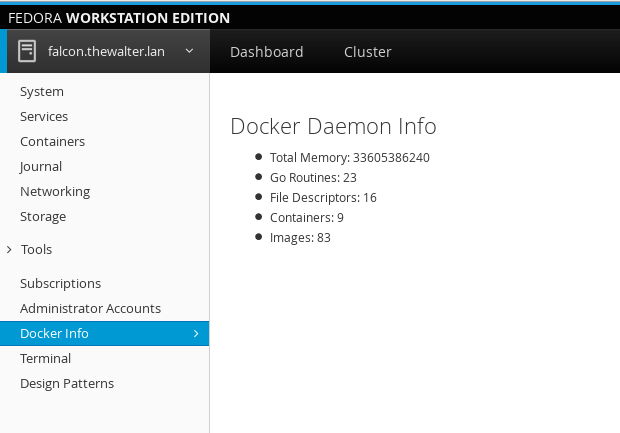

Here we’ll make a package called docker-info which shows info about the docker daemon. We use the /info docker API to retrieve that info.

I’ve prepared the docker-info package here. It’s just two files. The following commands download and extract them into your current directory, and install the result as a Cockpit package:

$ wget http://cockpit-project.org/files/docker-info.tgz -O - | tar -xzf -

$ cd docker-info/

$ mkdir -p ~/.local/share/cockpit

$ ln -snf $PWD ~/.local/share/cockpit/

Previously we talked about how packages are installed, and what manifest.json does so I won’t repeat myself here. But to make sure the above worked correctly, you can run the following command. You should see docker-info listed in the output:

$ cockpit-bridge --packages

...

docker-info: .../.local/share/cockpit/docker-info

...

If you’re logged into Cockpit on this machine, first log out and log in again. Make sure to log into Cockpit with your current user name, since you installed the package in your home directory. You should now see a new item in the Tools menu called Docker Info:

After a moment, you should see numbers pop up with some stats about the docker daemon. Now in a terminal try to run something like:

$ sudo docker run -ti fedora /bin/bash

You should see the numbers update as the container is pulled and started. When you type exit in the container, you should see the numbers update again. How is this happening? Let’s take a look at the docker-info HTML:

<html>

<head>

<title>Docker Info</title>

<meta charset="utf-8">

<link href="../base1/cockpit.css" type="text/css" rel="stylesheet">

<script src="../base1/jquery.js"></script>

<script src="../base1/cockpit.js"></script>

</head>

<body>

<div class="container-fluid">

<h2>Docker Daemon Info</h2>

<ul>

<li>Total Memory: <span id="docker-memory">?</span></li>

<li>Go Routines: <span id="docker-routines">?</span></li>

<li>File Descriptors: <span id="docker-files">?</span></li>

<li>Containers: <span id="docker-containers">?</span></li>

<li>Images: <span id="docker-images">?</span></li>

</ul>

</div>

<script>

var docker = cockpit.http("/var/run/docker.sock", { superuser: "try" });

function retrieve_info() {

var info = docker.get("/info");

info.done(process_info);

info.fail(print_failure);

}

function process_info(data) {

var resp = JSON.parse(data);

$("#docker-memory").text(resp.MemTotal);

$("#docker-routines").text(resp.NGoroutines);

$("#docker-files").text(resp.NFd);

$("#docker-containers").text(resp.Containers);

$("#docker-images").text(resp.Images);

}

/* First time */

retrieve_info();

var events = docker.get("/events");

events.stream(got_event);

events.always(print_failure);

function got_event() {

retrieve_info();

}

function print_failure(ex) {

console.log(ex);

}

</script>

</body>

</html>

First we include jquery.js and cockpit.js. cockpit.js defines the basic API for interacting with the system, as well as Cockpit itself. You can find detailed documentation here.

<script src="../base1/jquery.js"></script>

<script src="../base1/cockpit.js"></script>

We also include the cockpit.css file to make sure the look of our tool matches that of Cockpit. The HTML is pretty basic, defining a little list where the info is shown.

In the JavaScript code, first we set up an HTTP client to access docker. Docker listens for HTTP requests on a Unix socket called /var/run/docker.sock. In addition the permissions on that socket often require escalated privileges to access, so we tell Cockpit to try to gain superuser privileges for this task, but continue anyway if it cannot:

var docker = cockpit.http("/var/run/docker.sock", { superuser: "try" });

Next we define how to retrieve info from Docker. We use the REST /info API to do this.

function retrieve_info() {

var info = docker.get("/info");

info.done(process_info);

info.fail(print_failure);

}

In a browser you cannot stop and wait until a REST call completes. Anything that doesn’t happen instantaneously gets its results reported back to you by means of callback handlers. jQuery has a standard interface called a promise. You add handlers by calling the .done() or .fail() methods and registering callbacks.

The result of the /info call is JSON, and we process it here. This is standard jQuery for filling in text data into the various elements:

function process_info(data) {

var resp = JSON.parse(data);

$("#docker-memory").text(resp.MemTotal);

$("#docker-routines").text(resp.NGoroutines);

$("#docker-files").text(resp.NFd);

$("#docker-containers").text(resp.Containers);

$("#docker-images").text(resp.Images);

}

And then we trigger the invocation of our /info REST API call.

/* First time */

retrieve_info();

Because we want to react to changes in Docker state, we also start a long request to its /events API.

var events = docker.get("/events");

The .get("/events") call returns a jQuery Promise. When a line of event data arrives, the .stream() callback is invoked, and we use it to trigger a reload of the Docker info.

events.stream(got_event);

events.always(print_failure);

function got_event() {

retrieve_info();

}

This is a simple example, but I hope it helps you get started. There are further REST JavaScript calls you can make, including POST requests and so on.
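As a rough illustration of a call other than GET, here is a minimal sketch of stopping a container with a POST. It assumes that the client returned by cockpit.http() offers a .post(path, body) method alongside .get(), and that Docker exposes a /containers/(id)/stop endpoint. The stop_container name is made up for this example, so check the Cockpit and Docker API documentation before relying on it.

```javascript
/*
 * Hypothetical helper: ask Docker to stop a container.
 * "docker" is expected to be a client like the one created earlier with
 * cockpit.http("/var/run/docker.sock", { superuser: "try" }).
 * Assumption: the client has a .post(path, body) method.
 */
function stop_container(docker, id) {
    /* The container id or name becomes part of the URL path, so escape it */
    var path = "/containers/" + encodeURIComponent(id) + "/stop";

    /* This request carries no body, so send an empty string */
    return docker.post(path, "");
}
```

In a Cockpit page you would call stop_container(docker, "mycontainer") and attach .done() and .fail() handlers to the result, just like with .get().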

Cockpit's Kubernetes Dashboard

Here’s a video showing what I’ve been working on together with some help from a couple of Cockpit folks. It’s a Cockpit dashboard for Kubernetes.

If you haven’t heard about Kubernetes … it’s a way to schedule docker containers across a cluster of machines, and take care of their networking, storage, name resolution etc. It’s not completely baked, but pretty cool when it works.

<iframe src="http://www.youtube.com/embed/Fcfsu22RssU" html5=1 frameborder="0" height="450" width="800" type="text/html"> </iframe>

The Cockpit dashboard you see in the video isn’t done by any means … there’s a lot missing. But I’m pretty happy with what we have so far. I’m using Cockpit 0.61 in the demo. There are some nagging details to make things work, but hopefully we can solve them later. The Nulecule support isn’t merged yet; Subin has been working on it.

The Cockpit dashboard actually mostly works with Openshift v3. Openshift v3 is based on Kubernetes underneath, which makes apps that are developed there really portable.

Protocol for Web access to System APIs

Note: This post has been updated for changes in Cockpit 0.48 and later.

A Linux system today has a lot of local system configuration APIs. I’m not talking about library APIs here, but things like DBus services, command/scripts to be executed, or files placed in various locations. All of these constitute the API by which we configure a Linux system. In Cockpit we access these APIs from a web browser (after authentication of course).

How do we access the system APIs? The answer is the cockpit-bridge tool. It proxies requests from the Cockpit user interface, running in a web browser, to the system. Typically the cockpit-bridge runs as the logged in user, in a user session. It has similar permissions and capabilities as if you had used ssh to log into the system.

Let’s look at an example DBus API that we call from Cockpit. systemd has an API to set the system host name, called SetStaticHostname. In Cockpit we can invoke that API using simple JSON like this:

{

"call": [

"/org/freedesktop/hostname1",

"org.freedesktop.hostname1",

"SetStaticHostname", [ "mypinkpony.local", true ]

]

}

The protocol that the web browser uses is a message based protocol, and runs over a WebSocket. This is a “post-HTTP” protocol, and isn’t limited by the request/response semantics inherent to HTTP. Our protocol has a lot of JSON, and has a number of interesting characteristics, which you’ll see below. In general we’ve tried to keep this protocol readable and debuggable.

The cockpit-bridge tool speaks this protocol on its standard input and standard output. The cockpit-ws process hosts the WebSocket and passes the messages to cockpit-bridge for processing.

Following along: In order to follow along with the stuff below, you’ll need at least Cockpit 0.48. The protocol is not yet frozen, and we merged some cleanup recently. You can install it on Fedora 21 using a COPR or build it from git.

Channels

Cockpit can be doing lots of things at the same time and we don’t want to have to open a new WebSocket each time. So we allow the protocol to be shared by multiple concurrent tasks. Each of these is assigned a channel. Channels have a string identifier. The data transferred in a channel is called the payload. To combine these into a message I simply concatenate the identifier, a new line, and the payload. Let’s say I wanted to send the message Oh marmalade! over the channel called scruffy. The message would look like this:

scruffy

Oh marmalade!

How do we know what channel to send messages on? We send control messages on a control channel to open other channels, and indicate what they should do. The identifier for the control channel is an empty string. More on that below.
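To make the message layout concrete, here is a minimal sketch of joining and splitting a channel identifier and a payload. The composeMessage and parseMessage names are made up for this example and are not part of Cockpit.

```javascript
// Join a channel identifier and a payload into one protocol message.
// An empty channel identifier addresses the control channel.
function composeMessage(channel, payload) {
    return channel + "\n" + payload;
}

// Split a message at the first new line. Everything before it is the
// channel identifier, everything after it is the payload, which may
// itself contain further new lines.
function parseMessage(message) {
    const pos = message.indexOf("\n");
    if (pos === -1)
        throw new Error("invalid message: missing channel line");
    return {
        channel: message.substring(0, pos),
        payload: message.substring(pos + 1)
    };
}
```

Calling composeMessage("scruffy", "Oh marmalade!") gives the two line message shown above, and parseMessage() turns it back into its two parts.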

Framing

In order to pass a message based protocol over a plain stream, such as the standard input and standard output of cockpit-bridge, one needs some form of framing. This framing is not used when the messages are passed over a WebSocket, since WebSockets inherently have a message concept.

The framing that cockpit-bridge uses is simply the byte length of the message, encoded as a string, and followed by a new line. So Scruffy’s 21 byte message above, when sent over a stream, would look like this:

21

scruffy

Oh marmalade!
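The length prefix described above can be sketched like this. It is only an illustration of the framing rule, not code from Cockpit: the frameMessage and unframeMessage names are made up, and I'm assuming the length counts UTF-8 encoded bytes.

```javascript
const encoder = new TextEncoder();
const decoder = new TextDecoder();

// Prefix a message with its byte length and a new line.
function frameMessage(message) {
    const body = encoder.encode(message);
    const header = encoder.encode(body.length + "\n");
    const framed = new Uint8Array(header.length + body.length);
    framed.set(header, 0);
    framed.set(body, header.length);
    return framed;
}

// Try to take one framed message off the front of a byte buffer.
// Returns null when the buffer does not yet hold a complete frame.
function unframeMessage(bytes) {
    const newline = bytes.indexOf(10); // 10 is the "\n" byte
    if (newline === -1)
        return null;
    const length = parseInt(decoder.decode(bytes.subarray(0, newline)), 10);
    if (Number.isNaN(length))
        throw new Error("invalid frame: length is not a number");
    const start = newline + 1;
    if (bytes.length < start + length)
        return null;
    return {
        message: decoder.decode(bytes.subarray(start, start + length)),
        rest: bytes.subarray(start + length)
    };
}
```

Counting bytes rather than characters matters as soon as a payload contains non-ASCII text, which is why the sketch encodes the message before measuring it.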

Alternatively, when debugging or testing cockpit-bridge you can run in an interactive mode, where we frame our messages by using boundaries. That way we don’t have to count the byte length of all of our messages meticulously, if we’re writing them by hand. We specify the boundary when invoking cockpit-bridge like so:

$ cockpit-bridge --interact=----

And then we can send a message by using the ---- boundary on a line by itself:

scruffy

Oh marmalade!

----

Control channels

Before we can use a channel, we need to tell cockpit-bridge about the channel and what that channel is meant to do. We do this with a control message sent on the control channel. The control channel is a channel with an empty string as an identifier. Each control message is a JSON object, or dict. Each control message has a "command" field, which determines what kind of control message it is.

The "open" control message opens a new channel. The "payload" field indicates the type of the channel, so that cockpit-bridge knows what to do with the messages. The various channel types are documented. Some channels talk to a DBus service, others spawn a process, etc.

When you send an "open" you also choose a new channel identifier and place it in the "channel" field. This channel identifier must not already be in use.

The "echo" channel type just sends the messages you send to the cockpit-bridge back to you. Here’s the control message that is used to open an echo channel. Note the empty channel identifier on the first line:

{

"command": "open",

"channel": "mychannel",

"payload": "echo"

}

Now we’re ready to play … Well almost.

The very first control message sent to and from cockpit-bridge should be an "init" message containing a version number. That version number is 1 for the foreseeable future.

{

"command": "init",

"version": 1

}
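Putting the pieces together, a control message is just a JSON object sent on the channel with the empty identifier. Here is a minimal sketch of building the "init" and "open" messages shown above; the controlMessage helper is a made-up name for illustration.

```javascript
// Build a control message: an empty channel identifier, a new line,
// and then a JSON object that carries a "command" field.
function controlMessage(command, fields) {
    const body = Object.assign({ command: command }, fields);
    return "\n" + JSON.stringify(body);
}

// The first message exchanged with cockpit-bridge
const initMessage = controlMessage("init", { version: 1 });

// Open an echo channel with an identifier of our choosing
const openMessage = controlMessage("open", { channel: "mychannel", payload: "echo" });
```

Both strings start with a new line, because the channel identifier in front of it is empty.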

Try it out

So combining our knowledge so far, we can run the following:

$ cockpit-bridge --interact=----

In this debugging mode, messages sent by cockpit-bridge will be bold in your terminal. Now paste the following in:

{ "command": "open", "channel": "mychannel", "payload": "echo" }

----

mychannel

This is a test

----

You’ll notice that cockpit-bridge sends your message back. You can use this technique to experiment. Unfortunately cockpit-bridge exits immediately when its stdin closes, so you can’t yet use shell redirection from a file effectively.
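If you’d rather generate the text to paste than write it by hand, a small sketch like the following works. The interactiveInput name is hypothetical; it simply puts the boundary on its own line after each message, as the interactive mode expects.

```javascript
// Turn a list of protocol messages into text for the interactive mode,
// where each message is followed by the boundary on a line by itself.
function interactiveInput(messages, boundary) {
    return messages.map(function (message) {
        return message + "\n" + boundary + "\n";
    }).join("");
}

const pasteText = interactiveInput([
    // Control message: empty channel identifier, new line, JSON
    "\n" + JSON.stringify({ command: "open", channel: "mychannel", payload: "echo" }),
    // Data message on the channel we just opened
    "mychannel\nThis is a test"
], "----");
```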

Making a DBus method call

To make a DBus method call, we open a channel with the payload type "dbus-json3". Then we send JSON messages as payloads inside that channel. An additional field in the "open" control message is required. The "name" field is the bus name of the DBus service we want to talk to:

{

"command": "open",

"channel": "mydbus",

"payload": "dbus-json3",

"name": "org.freedesktop.hostname1"

}

Once the channel is open we send a JSON object as a payload in the channel with a "call" field. It is set to an array with the DBus object path, DBus interface, method name, and an array of arguments.

mydbus

{

"call": [ "/org/freedesktop/hostname1", "org.freedesktop.hostname1",

"SetStaticHostname", [ "mypinkpony.local", true ] ],

"id": "cookie"

}

If we want a reply from the service we specify an "id" field. The resulting "reply" will have a matching "id" and would look something like this:

mydbus

{

"reply": [ null ],

"id": "cookie"

}

If an error occurred you would see a reply like this:

mydbus

{

"error": [

"org.freedesktop.DBus.Error.UnknownMethod",

[ "MyMethodName not available"]

],

"id":"cookie"

}
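The call and reply matching described above can be sketched in a few lines. This is not how Cockpit itself implements it: createDBusCaller, its callback style, and the send function you pass in are all assumptions made for this example.

```javascript
// Minimal sketch of matching DBus replies to calls by their "id" field.
// "send" is whatever function delivers a message to cockpit-bridge.
function createDBusCaller(channel, send) {
    const pending = new Map(); // id -> callback waiting for an answer
    let nextId = 1;

    return {
        // Send a method call; callback(error, reply) runs when the answer arrives
        call: function (path, iface, method, args, callback) {
            const id = String(nextId++);
            pending.set(id, callback);
            const payload = JSON.stringify({ call: [path, iface, method, args], id: id });
            send(channel + "\n" + payload);
            return id;
        },

        // Feed in a payload received on the channel. Returns true if it
        // answered one of our pending calls, false otherwise.
        receive: function (payload) {
            const message = JSON.parse(payload);
            const callback = pending.get(message.id);
            if (!callback)
                return false;
            pending.delete(message.id);
            if (message.error) {
                const details = Array.isArray(message.error[1]) ? message.error[1].join(", ") : "";
                callback(new Error(message.error[0] + ": " + details), null);
            } else {
                callback(null, message.reply);
            }
            return true;
        }
    };
}
```

Generating a fresh "id" per call, instead of a fixed one like "cookie", lets several calls be in flight on the same channel at once.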

This is just the basics. You can do much more than this with DBus, including watching for signals, looking up properties, tracking when they change, introspecting services, watching for new objects to show up, and so on.

Spawning a process

Spawning a process is easier than calling a DBus method. You open the channel with the payload type "stream" and you specify the process you would like to spawn in the "open" control message:

{

"command": "open",

"channel": "myproc",

"payload": "stream",

"spawn": [ "ip", "addr", "show" ]

}

The process will send its output in the payload of one or more messages of the "myproc" channel, and at the end you’ll encounter the "close" control message, which we haven’t looked at until now. A "close" control message is sent when a channel closes. Either the cockpit-bridge or its caller can send this message to close a channel. Often the "close" message contains additional information, such as a problem encountered, or in this case the exit status of the process:

{

"command": "close",

"channel": "myproc",

"exit-status": 0

}
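On the receiving side, the output messages and the final "close" can be handled along these lines. This is a minimal sketch: createSpawnHandler is a made-up name, and the "problem" field it passes along is my assumption about how a problem is reported in the "close" message.

```javascript
// Collect the output of a spawned process from its channel, and report
// the result once the "close" control message for that channel arrives.
function createSpawnHandler(channel, onDone) {
    let output = "";

    return function handleMessage(message) {
        const pos = message.indexOf("\n");
        if (pos === -1)
            return; // not a valid message, ignore it
        const id = message.substring(0, pos);
        const payload = message.substring(pos + 1);

        if (id === channel) {
            // Process output arrives in one or more payloads
            output += payload;
        } else if (id === "") {
            // Control channel: look for the close of our channel
            const control = JSON.parse(payload);
            if (control.command === "close" && control.channel === channel)
                onDone(control["exit-status"], output, control.problem);
        }
    };
}
```

You would pass every message received from cockpit-bridge to the returned function; messages for other channels are ignored.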

Doing it over a WebSocket

Obviously in Cockpit we send all of these messages from the browser through a WebSocket hosted by cockpit-ws. cockpit-ws then passes them on to cockpit-bridge. You can communicate this way too, if you configure Cockpit to accept different WebSocket Origins.

And on it goes

There are payload types for reading files, replacing them, connecting to unix sockets, accessing system resource metrics, doing local HTTP requests, and more. Once the protocol is stable, solid documentation is in order.

I hope that this has given some insight into how Cockpit works under the hood. If you’re interested in using this same protocol, I’d love to get feedback … especially while the basics of the protocol are not yet frozen.

Cockpit on RHEL Atomic Beta

If you’ve tried out the RHEL Atomic Host Beta you might notice that Cockpit is not included by default, as it is in Fedora Atomic or CentOS Atomic. But there’s an easy workaround:

$ sudo docker run --privileged -v /:/host -d stefwalter/cockpit-atomic:wip

This is an interim solution, and has some drawbacks:

- Only really allows Cockpit to be used as root.

- The Storage and user accounts UIs don’t work.

And in general privileged containers are a mixed bag. They’re not portable, and really not containers at all, in the sense that they’re not contained. But this is an easy way to get Cockpit going on RHEL Atomic for the time being.